YouTube Isn’t the Only Platform With a Pedophilia Problem

When platforms care only about engagement, kids suffer.

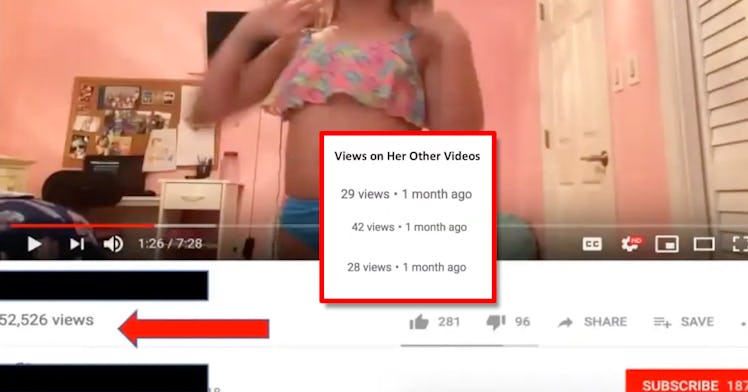

Last week, YouTube was in the news yet again after user Matt Watson hit the front page of Reddit with a video explaining a phenomenon he had discovered while on the video-sharing platform. Watson found that within a few clicks of normal, appropriate, and monetized (ad-sponsored) videos one could enter a wormhole of inappropriate, eroticized clips of girls as young as nine years old. In some instances, these videos were monetized, timestamped to flag suggestive moments in the videos, or had private, unlisted links that led invited users to view other pornography, sometimes of children. Reaction was swift. Such advertisers as AT&T and Epic Games quickly froze business on YouTube, which is owned by Google. The company promised to step it up with content and comment moderation by both human moderators and artificial intelligence. But it seems the same story keeps getting told: In 2017, YouTube dealt with the same problem, announced a slew of new moderation regulations, and declared the problem handled. It wasn’t.

But the sexual exploitation problem, particularly regarding children, is not limited to YouTube. The National Center on Sexual Exploitation (NCOSE) puts together a yearly list, dubbed the Dirty Dozen, of mainstream organizations that facilitate this exploitation. On this year’s list? Twitter, Amazon, United Airlines, the gaming platform Steam, and more. To find out more, Fatherly talked to Haley Halverson, NCOSE’s Vice President, who works on the Dirty Dozen list every year. Halverson spoke about how pervasive the problem of the sexual exploitation of kids is on the Internet, how even “safe” platforms are problematic, what can be done, and why certain companies will only take measures to get rid of these videos when they’re told.

Tell me about the Dirty Dozen list. How long has NCOSE been putting it together?

The Dirty Dozen list started in 2013. Since then, it’s been an annual list. It’s 12 different targets. One of the requirements is that the entities listed are typically mainstream players in America (usually corporations, though occasionally we’ll have an organization on there) that are facilitating sexual exploitation. We try to have a variety of industries represented and we also try to have a variety of issues of sexual exploitation represented on the list as well. At NCOSE, one of our primary messages is that all forms of sexual exploitation are inherently connected to one another. We can’t solve child sexual abuse without talking about sexual violence and prostitution; all of these things feed into one another. We need to have a holistic solution. That’s why we try to have the Dirty Dozen list cover a wide variety of issues.

Looking at the list this year, probably 50 percent are media companies or websites. Steam. Twitter. Roku. Netflix. HBO. Why did these websites and platforms make the list?

We’ve got many companies, social media are on there, media/entertainment centers are on there. This is tough because I do them all and they’re all very different.

As far as HBO and Netflix go, those are maybe the two most similar ones on our list. With them, we’re seeing that there’s really a lack of awareness and social responsibility in the media about how we portray sexual exploitation issues. For example, gratuitous scenes of sexual violence. HBO’s Game of Thrones has shown several rape scenes. There have been rape scenes on 13 Reasons Why on Netflix. In addition, both of these sites have either normalized or minimized issues like sex trafficking. Netflix has come under fire because they’ve now had at least two instances where they portrayed either underage nudity or child eroticization. So we’re seeing that the media has a heavy role to play in our culture, and when media is portraying these issues irresponsibly, it not only decreases empathy for victims, it sometimes eroticizes sexual violence or sends incorrect messages about the harms of commercial sexual exploitation.

What have you found on sites like Twitter, Steam, and Google?

For example, on Twitter, we know that not only is there a plethora of pornography, but there is a very real reality that people are using Twitter to facilitate sex trafficking and prostitution. Not only through direct messaging but also through finding each other on hashtags, through sharing photographs and videos. So the fact that Twitter is being used to facilitate sex trafficking and pornography is incredibly problematic.

On YouTube, everything came out this week about the #WakeUpYouTube scandal and how there are hordes of videos of young girls that are being eroticized by essentially pedophiles or child abusers in the comment sections, trading contact information with each other to network, and trying to get contact information from the girls so they can follow up with them and groom them on social media, supposedly for more sexual abuse. That is happening on Twitter as well. We’re seeing these large internet platforms just aren’t taking enough responsibility to address these very serious problems.

What do you think needs to happen?

YouTube right now is mostly relying on users to actually flag harmful content, and then YouTube has human eyes reviewing hours and hours of videos which are uploaded every minute. The same is true with Twitter. Millions of people are tweeting constantly. For them to have these really outdated systems is irresponsible. So, we think they need to take a more proactive approach in how their algorithms work, using better AI. They need to actually make dealing with sexual exploitation a priority, because right now it’s not. Right now they’re happy that people are commenting and that videos have high views and they are looking at the corporate profit bottom-line instead of, actually, the health and safety of their users. Right now, they’re okay with those types of messages proliferating as long as it doesn’t rise to the level of literal child pornography — but, that’s such a low bar. People are being groomed for abuse. They are being bought and sold on their platforms with or without actual child pornography being shared. That should not be the standard.

So, engagement is the bottom line for media companies. Whatever that engagement might mean probably isn’t relevant to YouTube unless they get called out on it. But this isn’t a new problem, right? This has been around since 2017.

That’s really the trend with YouTube. And it’s the trend with Twitter, and pretty much all of these companies. They’ll get slammed in the media, maybe they’ll do a little bit of cleanup for a short period of time around those specific videos they’re being called out about and then they just wait for us to forget and they go on with business as usual.

I saw EBSCO was on the Dirty Dozen list. EBSCO, in part, serves kids in K-12 schools. Is this research platform being called out for having comprehensive sex education? Is it sending school-aged kids to data on that? Or is it sending them to legit porn?

NCOSE does not get involved in sex education debates. We don’t have a bone or dog in that fight. So with EBSCO, that is in thousands of schools. There is actually pornography in the platform, including live links to pornography.

How does that even happen?

Yeah. It’s crazy. They have a lot of PDFs, and in the PDFs, they’ll have hardcore links. In early October 2018 we went through EBSCO and found live links to BDSM pornography sites, live links to very violent pornography sites, and the problem is that EBSCO is not recognizing that their ultimate user is children. It’s children that use their platforms. EBSCO, the whole point of EBSCO — this is the important thing — the whole point of having an educational database in our school is that it is safe, age appropriate, educational information. So if EBSCO can’t clean up its database in order to be that, then we might as well just put all these kids on Google. What’s the point of having an educational database unless it’s actually providing what they say they are?

That’s nuts. I would imagine it should be a closed, safe information system. When I went to college, we had JSTOR. That is only filled with verified academic materials. I would imagine EBSCO would be even more strict, especially when used for K-12 kids.

Yeah, they have a list of publishers that they work with. They take their content. Those publishers have included media outlets like Cosmopolitan Magazine and Men’s Health, which regularly have articles about pornography, normalizing prostitution, and encouraging people to engage in sexting. That’s not educational information, and it’s particularly not something a kid in elementary school needs to be accessing. The problem is that, at least from what I’ve seen, it appears they just put up everything publishers are sending in.

Granted, they’ve made a lot of progress. This is EBSCO’s third year on the Dirty Dozen list, and when we were first working with them, I could type in the word “respiration” or “7th grade biology” and from that search you would be able to find hardcore pornography. That has now been greatly fixed. Their elementary school database is particularly improved, but their high school database still has a ways to go and has some work that needs to be done.

With Twitter and Netflix, it makes sense to me that you can log on and quickly see what’s happening. How did you discover this about EBSCO?

We started hearing about EBSCO from parents around the country, because children were stumbling across this content. And really, parents are horrified when they realize this is a problem, and they should be. If EBSCO isn’t going to clean up their services then they should at least warn parents that their kids are probably going to be exposed to graphic content.

What about Steam? That’s an online video game platform that a ton of kids 18 and under use. What goes on there that is problematic?

Steam was on the Dirty Dozen list in 2018. They first came to our attention because there was a rape-themed game called “House Party,” where the entire theme of the game is to walk into a house and have sex with all the women who are there and you do this through getting them drunk, sometimes lying to them, sometimes blackmailing them. There are different categories. Not only is that bad in and of itself, but it was animated pornography that included penetrative sex acts.

That’s what got Steam onto our list. As we looked, we saw they had a large and increasing number of games similar to that. We had some progress. They started to remove some of these games. But then, in June of 2018, they flipped and made a new policy to allow everything onto the Steam store except for things that were illegal. When that happened, the number of games that were tagged for nudity or sexual content doubled from 700 games to 1,400 in just over four months. Now there are over 2,000 games with this tag. So, not only is Steam facilitating the increased trend of pornified video games, which often gamify sexual violence and coercion, but also their filtering and parental controls are basically non-existent. Even with the filters on, you’re just a few clicks away from being able to access pornographic or sexually graphic games.

What can YouTube do? What can any of these platforms really do?

For each target, we write them a letter and explain to them the reason they’re on the Dirty Dozen list and we ask them for specific improvements. For YouTube, one of the biggest things is we just want them to be more proactive in how they are policing their site. They need to use AI and update their algorithms. They already have algorithms that can detect when a number of predatory comments are being made on a video.

About a year ago or so, there was a YouTube announcement where they said one of the ways they were getting tougher to protect families on YouTube was by disabling comments on videos where those comments were becoming increasingly predatory. I did a deep dive into the YouTube scandal this last week and pulled together proof of what was going on. The fact that a lot of those videos have the comments disabled means that the YouTube algorithm recognizes that there were a lot of predatory comments happening.

But the only action they took was to disable the comments. They still left the video up — videos of very young girls, 9 or 11 years old, being eroticized by men. They got hundreds of thousands of views. I saw multiple videos with two million views. That’s putting that child at risk. Even if you are disabling the comments. They need to take a more proactive approach to keep people on their platform safe instead of just prioritizing engagement.

Some of the kids in these videos are clearly under 13. Even when I was a kid, I lied about being 13 to get on the Internet.

Yeah, kids just lie. These social media companies need to be more conscious about their platforms, and not less conscious about their platforms.