Parents Will Be Alerted if Kids Send or Receive Nude Photos in Next iPhone iOS

It's meant to protect kids from online sexual predators.

Beginning with iOS 15.2, parents will be able to turn on a feature that lets them snoop on their kids by alerting them whenever the kids send or receive sexually explicit photographs. The feature is meant to protect kids from online sexual predators, but it’s not arriving without controversy.

Here’s what parents whose kids have iPhones can expect from the new feature, and what all parents should know about this new front in both the battle against child sexual abuse material (CSAM) and the debate over online privacy.

How does it work?

Put simply, Apple’s technology converts images to numbers (known as hashes) based on the specific features of the image. It’s designed to ensure that different images produce different hashes while identical and visually similar images produce the same hash.

When a child receives or tries to send a photograph, on-device machine learning assigns a hash to that image and compares it with hashes generated from databases of child sexual abuse material maintained by the National Center for Missing and Exploited Children.
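To make the hashing idea concrete, here is a minimal sketch in Python. It is not Apple's algorithm, which is far more sophisticated and not reproduced here. It uses a simple "average hash" as a stand-in, and the function names, the tiny 4x4 sample images, and the `max_distance` threshold are all assumptions made up for illustration. It only shows how a visually similar image can land on the same hash as the original while a different image does not.

```python
def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter
    than the image's mean. `pixels` is a 2D list of grayscale values (0-255)."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(hash_a, hash_b):
    """Number of bit positions in which two hashes differ."""
    return bin(hash_a ^ hash_b).count("1")


def matches_known(image_hash, known_hashes, max_distance=0):
    """True if the hash is within `max_distance` bits of any known hash.
    The threshold is a hypothetical tuning knob, not a documented setting."""
    return any(hamming_distance(image_hash, known) <= max_distance
               for known in known_hashes)


# Hypothetical 4x4 grayscale "images" used only for this illustration.
original = [[10, 20, 200, 210],
            [15, 25, 205, 215],
            [10, 20, 200, 210],
            [15, 25, 205, 215]]
# The same picture, slightly brightened: visually similar to the original.
brighter = [[value + 5 for value in row] for row in original]
# An unrelated picture: dark top half, bright bottom half.
different = [[10, 10, 10, 10],
             [20, 20, 20, 20],
             [200, 200, 200, 200],
             [210, 210, 210, 210]]

known_hashes = {average_hash(original)}
print(matches_known(average_hash(brighter), known_hashes))   # similar image
print(matches_known(average_hash(different), known_hashes))  # unrelated image
```

In this toy version, brightening every pixel leaves the hash unchanged because each pixel keeps its position relative to the mean, which is the kind of robustness to small edits the article describes.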

What exactly does it do?

CSAM scanning will be turned off by default, leaving it up to parents whether or not to activate it for the kids’ phones on their Family Sharing plan. If they do, here’s what happens.

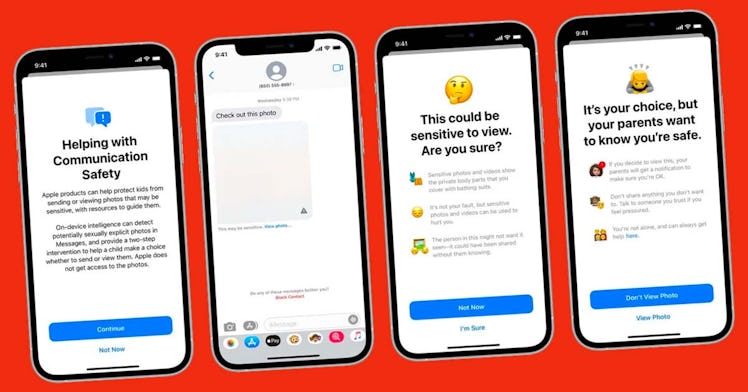

iOS will automatically blur potentially sexually explicit photos sent to kids’ phones. Kids who tap on those photos to view them will see an alert asking if they’re sure, along with warnings that the photo could contain “private body parts that you cover with bathing suits,” that it might be used to hurt kids, and that it might have been sent without the knowledge of the person in the photo. If kids indicate that they still want to see it, another screen pops up letting them know that their parents will be notified.

A similar set of warnings, including one about parental notification, will pop up if kids try to send sexually explicit photos.

What are the privacy concerns?

This feature was supposed to be included in iOS 15, which was released on September 20, but it was delayed after privacy advocates sounded the alarm. The principal argument against deploying the technology is summarized in a recent paper on the subject.

“Even if deployed initially to scan for child sex-abuse material, content that is clearly illegal, there would be enormous pressure to expand its scope,” the authors wrote. “We would then be hard-pressed to find any way to resist its expansion or to control abuse of the system.”

Essentially, the tech would create a door into people’s phones that could be used for nefarious purposes, from governments targeting political dissidents to abusive spouses spying on their partners. Apple has consistently said that it would resist any such expansion, and some children’s advocates have applauded the company’s efforts to keep kids safe.

Time will tell which side is correct, but in the meantime parents who’ve given their kids iPhones will have the option to activate this feature when iOS 15.2 is released, likely before the end of the year.